The transformer limitation

Large Language Models (LLMs) have been revolutionary, but their reliance on the transformer architecture imposes a ceiling. They struggle with complex, multi-step reasoning, require colossal amounts of data and computation to train, and their centralized deployment creates significant privacy concerns. Simply making these models bigger yields diminishing returns; it is not a viable route to true machine intelligence.

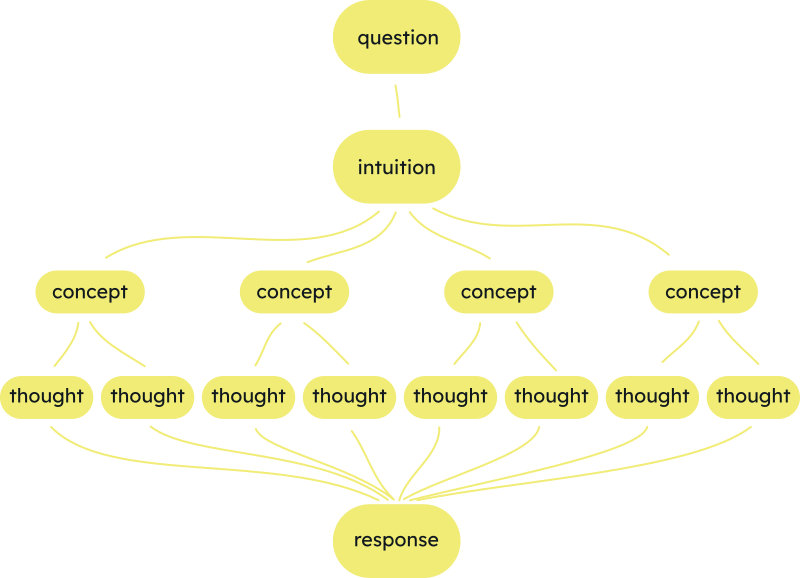

Our architectural edge

At Farang, we have moved beyond the transformer. Our architecture draws on principles of biological cognition, in particular how information is processed, stored, and recalled. This lets our models achieve deeper contextual understanding and tackle specialized cognitive tasks that are far beyond the reach of today’s LLMs.